I’m fortunate enough to live in San Francisco, which meant that when Node Knockout rolled around I spent it hacking in Joyent’s HQ on the 20th floor of a downtown building.

I’ve been at Yahoo! for a while and I’m used to hacking in 24-hour competitions (hack days), but this time we had 48 hours. It turns out that makes a massive difference, in both good and bad ways. In a 24-hour project the obvious way to get that little extra boost is to code all night. This is not possible in a 48-hour contest, at least if you want to write code that works. However, because we all planned to go somewhere to sleep, I don’t think our team was as gelled as on some of the 24-hour projects, because there wasn’t the same kind of urgency throughout. This really showed in how the team interacted with the outside world. It’s much harder to shut out the world for a weekend than it is for 24 hours. The two of us in relationships left hacking for hours at a time to see our significant others. While this isn’t a bad thing in general, I don’t think it helped us reach a fully feature-complete product.

I am, however, really happy with what we built. We aimed for something unreasonably ambitious and I feel like we managed only to achieve something damn good. Which is fine by me.

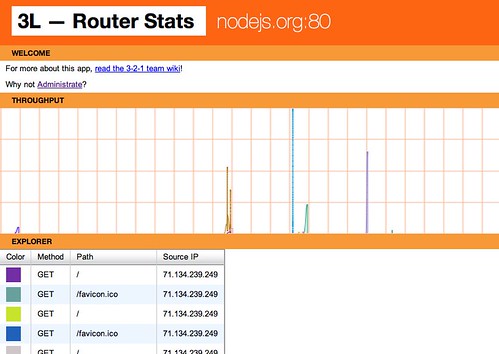

What we built was a reverse HTTP proxy: an HTTP router. What’s different about our project is the real-time reporting and the ability to create routes and access rules on the fly in JavaScript. Let me break each of those down a little bit more.

When you visit a route, for example demo2.ko-3-2-1.no.de, the proxy will round-robin your connection across all of the upstream hosts associated with that route. In the case of demo2 that means sports.yahoo.com and yahoo.com. In the simplest case you now have an HTTP load balancer. Since Node.js is significantly faster than most web servers it would be feasible for many people to simply insert our proxy in front of their existing infrastructure.
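To make the round-robin idea concrete, here is a minimal sketch of how a route might hand out its upstream hosts in turn. The `routes` table and the `pickUpstream` helper are names I made up for illustration; they are not the project's actual data model.

```javascript
// Hypothetical route table: the shape of this object is an assumption,
// only the host names come from the demo2 example above.
var routes = {
  'demo2.ko-3-2-1.no.de': {
    upstreams: ['sports.yahoo.com', 'yahoo.com'],
    next: 0 // index of the upstream that receives the next connection
  }
};

// Return the upstream host for the next connection on this route
// and advance the cursor, wrapping around at the end of the list.
function pickUpstream(routeName) {
  var route = routes[routeName];
  if (!route || route.upstreams.length === 0) {
    return null; // unknown route, or nothing to proxy to
  }
  var host = route.upstreams[route.next];
  route.next = (route.next + 1) % route.upstreams.length;
  return host;
}
```

Each call returns the next host in the list, so consecutive connections to demo2 would alternate between the two upstreams.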

What would this get them other than load balancing? One of the other features we added was a rich API including a web-socket which streams the connection data. This can be seen on the admin interface we mocked up to demonstrate what the proxy is doing. An HTML5 canvas based graph is connected to the web-socket and streams the events as they happen. We also load the details of each request into a YUI3 datatable so you can see exactly what is being requested.
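As a rough sketch of the streaming idea, the proxy could publish one event per request and every connected client could receive it as JSON. This uses Node's built-in `EventEmitter`; the `subscribe` and `reportRequest` helpers, the `'request'` event name and the fields in the payload are all assumptions for illustration, and the `send` callback stands in for a real web-socket connection.

```javascript
var EventEmitter = require('events').EventEmitter;

// Hypothetical event bus standing in for the proxy's internals.
var proxyEvents = new EventEmitter();

// Subscribe a client. `send` stands in for a web-socket's send method.
// Returns a function that unsubscribes the client again.
function subscribe(send) {
  function onRequest(details) {
    send(JSON.stringify(details));
  }
  proxyEvents.on('request', onRequest);
  return function unsubscribe() {
    proxyEvents.removeListener('request', onRequest);
  };
}

// Called by the (hypothetical) proxy each time it handles a request.
function reportRequest(route, url, upstream) {
  proxyEvents.emit('request', {
    time: Date.now(),
    route: route,
    url: url,
    upstream: upstream
  });
}
```

A graph or a datatable would then just be two different consumers of the same stream of messages.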

I also made a screencast showing the interface in action.

The final and least obvious feature (because we didn’t have time to write an interface for it) is the ability to add dynamic rules via the API. This is a really powerful and useful feature. Let me give an example:

    var hardBlockedIps = [];
    hardBlockedIps.push({ip: '127.0.0.1', expires: undefined}); // block 127.0.0.1 forever
    hardBlockedIps.push({ip: '10.0.0.1', expires: 1283223005549}); // block 10.0.0.1 until the timestamp expires

    for (var i = 0; i < hardBlockedIps.length; i++) {
      if (req.connection.remoteAddress === hardBlockedIps[i].ip) {
        if (hardBlockedIps[i].expires !== undefined && (new Date).getTime() > hardBlockedIps[i].expires) {
          // the block has expired, so let the request through
          console.log("Hard Block on IP " + hardBlockedIps[i].ip + " expired");
          break;
        } else {
          // drop the connection without sending any HTTP response
          req.connection.end();
          res.end();
          console.log("Hard Blocked IP " + hardBlockedIps[i].ip);
          router.stopPropegation('clientReq');
          router.preventDefault('clientReq');
          break;
        }
      }
    }

This function, when attached to the clientReq event in the router, will “hard block” any requests from IP addresses in the array. An interesting characteristic is that because this is attached to the event which accepts the initial request from the client, we can kill the connection off before we even connect to the upstream host. Not only do we not send an HTTP response (we simply terminate the TCP connection), we also tell the router not to process any more rules by calling the functions router.stopPropegation(event) and router.preventDefault(event).

It is also easy to implement a soft block, which returns an HTTP 503 status instead of closing the TCP stream, or to use more complex lookup rules than searching an array. This is definitely a part of the admin interface we will be expanding.
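For comparison, here is a sketch of what a soft block might look like, with an object keyed by IP instead of an array scan. The `softBlockedIps` table and the `isBlocked` and `softBlock` helpers are hypothetical names. I am assuming the rule receives the same `req`, `res` and `router` objects as the hard-block example, and that `res` is a standard Node HTTP response.

```javascript
// Hypothetical lookup table keyed by IP. An undefined expiry means
// "blocked forever", as in the hard-block rule.
var softBlockedIps = {
  '127.0.0.1': { expires: undefined },
  '10.0.0.1': { expires: 1283223005549 }
};

// True when `ip` has a block entry that has not expired at time `now`.
function isBlocked(ip, now) {
  if (!Object.prototype.hasOwnProperty.call(softBlockedIps, ip)) {
    return false;
  }
  var entry = softBlockedIps[ip];
  return entry.expires === undefined || now <= entry.expires;
}

// Rule body: answer blocked clients with a 503 instead of dropping the socket.
function softBlock(req, res, router) {
  if (!isBlocked(req.connection.remoteAddress, Date.now())) {
    return false; // not blocked: let later rules and the proxying run
  }
  res.writeHead(503, { 'Content-Type': 'text/plain' });
  res.end('Service Unavailable');
  // Same calls as the hard-block rule, so no upstream connection is made.
  router.stopPropegation('clientReq');
  router.preventDefault('clientReq');
  return true;
}
```

The client gets a proper HTTP answer here, which is friendlier to well-behaved callers, at the cost of doing slightly more work per blocked request than simply closing the socket.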

And that brings me to my final point about competing. 48 hours is big enough that you can bite off something challenging, and fail. Not in the sense that we didn’t build something great (I think we did), but it’s not complete. I have a list of things I’m chomping at the bit to add to this. By challenging ourselves with a tough project we’ve set ourselves up for success in the future (and hopefully in the project), because this is a very useful system and I now have some momentum to really build it into something that will be used every day.
